Better Inputs, Better Factories: What We Learned About Measuring Work

Industrial engineers spend anywhere from under 30 minutes to well over 14 hours a week recording GoPro videos, writing MODAPTS codes, and hunting for missing minutes in their routings. Here is what we learned from IEs at Tesla, GM, and dozens of other manufacturers about how time studies actually get done.

A month ago we set out to learn how industrial engineers, the people who keep factories on beat, actually spend their time and how we could help. Since then, we’ve spoken with industrial engineers across virtually every manufacturing sector, from automakers and Tier‑1 suppliers to electronics firms and even prefabricated construction goods manufacturers running automotive‑style lines for walls and roof trusses. We discovered how improvement really happens: an engineer on the floor with a phone or GoPro, observing a station for a minute at a time, writing MODAPTS or MTM codes back at a desk, updating routings when numbers drift, validating vendor cycle times at buy‑off, and tracking down the “missing minutes” that don’t appear in MES. The story was always the same: lots and lots of measurements, taken and annotated by hand.

It’s A Lot of Manual Work

Across plants, time studies are still done with phones, GoPros, stopwatches, and spreadsheets.

“We go to the floor with a GoPro, follow hands for ~50 seconds, then watch the video many times to write the MODAPTS.” — IE at Tesla

“I’m using a phone stopwatch and a notepad. I walked 5,000 steps to diagnose one AMR routing issue.” — IE at GM

How much time does this consume? It varies by company and role. One advanced robotics integrator told us each member of his four‑person team spends 14 hours weekly writing MODAPTS plus 4–8 hours on time studies, with the rest of the week in buy‑off meetings. Their team uses these studies to decide whether a task is better off done by a person or by a robot, then later at acceptance to fine‑tune utilization by improving robot‑robot and robot‑human handoffs.

At another large automotive plant, an industrial engineer said it takes only 1–2 hours per person weekly, but at that single, very large location more than 100 people are involved in time and motion studies. Its lines run continuously with hundreds of short‑cycle steps, and the team is always hunting for a few seconds of improvement.

For some teams the workload is spiky. Another automotive site spends under 30 minutes per person weekly, leaning on OEE and controller data most of the time, then shifting hard into manual measurement during launches where everything has to be measured directly.

Because so many teams reported making measurements with GoPros or even their personal phones, we kept asking why they did not just put overhead cameras at every assembly station. At most plants, always‑on video pointed at people triggers immediate hesitation. Operators do not want to feel watched every second, and there is strong fear of union pushback against anything that looks like “productivity surveillance.” There is also a practical side. Overhead cameras with fixed angles make sense in a stable environment, but real lines change all the time, especially at launch and ramp‑up, when time and motion studies are most valuable. Fixtures move, totes shift, glare comes and goes, and a fixed solution stops fitting well. It is also why older computer vision approaches struggled: they wanted a rigid scene in an environment that was still fluid.

The Coming of Robots

“You really can’t beat the dexterity of people for a lot of these tasks.” — IE at Tesla

Even in highly automated plants, the fine, variable, and awkward motions are still handled by people. Over‑automating those stations often backfires. If your robotic first‑pass yield is bad, the rework can eat up all the advantages of the automation. The lesson most teams shared was to automate simple, large motions and operations that are insensitive to small variations, while keeping dexterous and variable tasks with humans. When evaluating whether to automate a line, weigh both on-router timing and changes in rework.
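The rework point is easy to check with a little arithmetic. The sketch below compares time per good part for a manual station and an automated one when failed parts go through a rework loop. The function name and every number are hypothetical, chosen only for illustration, and the model assumes each failed part is reworked once and then passes.

```python
def effective_seconds_per_good_part(cycle_s, first_pass_yield, rework_s):
    """Average station time spent per good part.

    Assumes every part that fails first pass is reworked exactly once
    and then passes (a simplification: real loops can repeat or scrap).
    """
    if not 0.0 < first_pass_yield <= 1.0:
        raise ValueError("first_pass_yield must be in (0, 1]")
    return cycle_s + (1.0 - first_pass_yield) * rework_s


# Hypothetical numbers, not taken from any plant in this article.
manual = effective_seconds_per_good_part(
    cycle_s=30.0, first_pass_yield=0.98, rework_s=120.0
)
robot = effective_seconds_per_good_part(
    cycle_s=24.0, first_pass_yield=0.90, rework_s=120.0
)

print(f"manual: {manual:.1f} s per good part")
print(f"robot:  {robot:.1f} s per good part")
```

With these made-up inputs the robot wins on router time (24 s against 30 s) yet loses once rework is counted, which is the pattern the teams described.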

Beyond measuring humans, several teams asked for machine‑side elemental breakdowns such as door or cylinder open, clamp, transfer, and robot dwell.

“We don’t get elemental from the PLC. I want two minutes of video and an elemental breakdown. Our site runs ~10 seconds or under.” — IE at GM

“I’ve always validated PLC data with a stopwatch. Once the program didn’t count dwell times. I’m watching the robot waiting for another robot to clear, but the system showed 100% utilization.” — IE at Midway

Small drifts multiply. Add 200 milliseconds to a 10‑second door‑open across 30,000 cycles in a day and you have quietly lost 100 minutes of capacity (0.2 s × 30,000 = 6,000 s). If the bottleneck owns that step, the whole line feels it. Elemental timing turns a blended cycle into a set of distributions for door‑open, cylinder‑extend, robot‑dwell, and transfer. That lets you see exactly which piece is moving and by how much. This is also critical in acceptance testing, where variability can come not just from the robot itself but from how it interacts with other robots and humans on the line.
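As a rough illustration of both ideas, the sketch below first computes the capacity lost to a fixed per-cycle drift, then breaks a handful of cycles into per-element statistics to show which element carries the spread. The element names follow the paragraph above; the timing values and helper names are invented for the example.

```python
from statistics import mean, stdev


def lost_minutes(drift_s, cycles):
    """Capacity lost when every cycle runs drift_s seconds longer."""
    return drift_s * cycles / 60.0


print(f"lost capacity: {lost_minutes(0.2, 30_000):.0f} minutes")

# Hypothetical elemental timings in seconds, one dict per observed cycle.
observed_cycles = [
    {"door_open": 10.0, "cylinder_extend": 1.2, "robot_dwell": 0.8, "transfer": 3.1},
    {"door_open": 10.2, "cylinder_extend": 1.2, "robot_dwell": 0.9, "transfer": 3.0},
    {"door_open": 10.1, "cylinder_extend": 1.3, "robot_dwell": 2.4, "transfer": 3.1},
    {"door_open": 10.2, "cylinder_extend": 1.2, "robot_dwell": 0.7, "transfer": 3.0},
    {"door_open": 10.0, "cylinder_extend": 1.2, "robot_dwell": 3.1, "transfer": 3.1},
]


def summarize(cycles):
    """Group timings by element and return mean and sample stdev for each."""
    by_element = {}
    for cycle in cycles:
        for name, seconds in cycle.items():
            by_element.setdefault(name, []).append(seconds)
    return {
        name: {"mean": mean(values), "stdev": stdev(values)}
        for name, values in by_element.items()
    }


summary = summarize(observed_cycles)
widest = max(summary, key=lambda name: summary[name]["stdev"])
for name, stats in summary.items():
    print(f"{name:16s} mean={stats['mean']:.2f}s stdev={stats['stdev']:.2f}s")
print("largest spread:", widest)
```

In this invented data the blended cycle time would only look "a bit slow"; the per-element view points at robot dwell as the piece that is moving.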

Simulation Models Look Great Until the Line Actually Runs

“The simulation is good… but it’s ideal. The human factor is the weakest point.” — IE at Cummins

“Who are we timing? The best person, the fastest, or the average one? It is better to have an average and get rid of the outliers.” — IE at HME

Variance is the missing character in many factory models, and you only see it when you pair standards with fresh observation. One Tier-1 supplier described writing MODAPTS across a multi-station line and then sampling real cycles to locate the spread, which sometimes hid in a long-reach part pick and other times in a finicky fixture engage. A single “standard time” is convenient for planning files and digital twins, but it rarely yields a reliable forecast because the shop floor shifts with staffing mix, option content, fixture condition, and aging equipment. That same drift helps explain why OEE so often stalls at around 60 percent.

Closing the gap starts with better inputs rather than a bigger model. Capture current, observed times for the steps that actually gate throughput, refresh routings as those steps move, and feed the twin with recent empirical distributions for the bottleneck’s elemental events. Set buffers and staffing to match the measured spread, then re-measure after method changes, model launches, or maintenance. The model improves because the inputs improve, and the forecast begins to reflect the factory you actually run.
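To make "feed the twin with empirical distributions" concrete, here is a minimal sketch that forecasts hourly output at a bottleneck two ways: once from a single standard time, and once by resampling observed cycle times. The standard time, the observations, and the function name are all hypothetical, and simple resampling with replacement stands in for whatever a real simulation package would do.

```python
import random

STANDARD_S = 10.0  # hypothetical planning standard for the bottleneck step
SHIFT_S = 3600     # forecast one hour of running

# Hypothetical observed cycle times in seconds, including two slow cycles.
observed = [9.8, 10.0, 10.1, 9.9, 10.2, 10.0, 14.5, 10.1, 9.9, 18.0]


def forecast_units(observed_cycle_s, shift_s, runs=200, seed=0):
    """Resample observed cycle times to estimate units completed per shift.

    Returns the sorted unit counts from `runs` simulated shifts.
    """
    rng = random.Random(seed)
    totals = []
    for _ in range(runs):
        elapsed, units = 0.0, 0
        while True:
            elapsed += rng.choice(observed_cycle_s)
            if elapsed > shift_s:
                break
            units += 1
        totals.append(units)
    return sorted(totals)


plan = int(SHIFT_S // STANDARD_S)
totals = forecast_units(observed, SHIFT_S)
p10, p50, p90 = (totals[int(q * (len(totals) - 1))] for q in (0.10, 0.50, 0.90))

print(f"plan from standard time: {plan} units")
print(f"resampled forecast: p10={p10}, p50={p50}, p90={p90} units")
```

The gap between the plan and the resampled median is the part of the forecast that a single standard time cannot see, and the p10 to p90 range is one way to size buffers against the measured spread.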

Try What We Built

We took all of this and built something small and practical. Record a short clip with a phone, a GoPro, or an overhead camera. Upload it. Get back coded output you can edit in seconds. You will see atomic motions mapped to MODAPTS, grouped worksteps, or elemental machine states like door‑open and robot‑dwell aggregated across cycles. Export two files, an atomic elements table and a workstep summary, that drop straight into the spreadsheets and planning templates you already use.
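For readers who have not worked with coded output before, the sketch below shows how an atomic elements table can roll up into a workstep summary. It relies on two properties of MODAPTS: the number in each element code is its MOD value, and one MOD is 0.129 seconds. The table layout, workstep names, and helper function are hypothetical and are not the export format described above.

```python
import re
from collections import defaultdict

MOD_SECONDS = 0.129  # one MOD in the MODAPTS system


def mods(code):
    """Return the MOD value of one MODAPTS element such as 'M3' or 'G1'."""
    match = re.fullmatch(r"([A-Z])(\d+)", code)
    if match is None:
        raise ValueError(f"not a single MODAPTS element: {code!r}")
    return int(match.group(2))


# Hypothetical atomic elements table: (workstep, MODAPTS element).
atomic = [
    ("pick bracket", "M3"),
    ("pick bracket", "G1"),
    ("place bracket", "M3"),
    ("place bracket", "P2"),
]

# Roll atomic elements up into total MODs per workstep.
workstep_mods = defaultdict(int)
for workstep, code in atomic:
    workstep_mods[workstep] += mods(code)

for workstep, total in workstep_mods.items():
    print(f"{workstep}: {total} MODs = {total * MOD_SECONDS:.3f} s")
```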

We are not asking anyone to install a camera grid or add a new scanning step. This is for targeted studies, faster buy‑offs, cleaner routings, and fewer surprises between the model and the floor. If you want to see it annotate your work, record a 60 to 90 second clip, human task or machine cell, and try us at app.materialmodel.com. If you need security details first, you can see exactly how the data flows, how long it is retained, and who can see it on our security page.

Related Articles

Don’t Undermeasure: How Cheap Annotation Changes the Tempo of Decision-Making

When measurement is expensive, planning becomes unreliable; automating time‑study annotation lowers the cost of fresh data so that every decision can be grounded in facts.

When KPIs Go Wrong: Goodhart's Law for Industrial Engineers

KPIs that boil down to “just do it faster” create rework, hidden factories, and fake wins on dashboards. But there is a better way that can help align everyone from operator to supervisor with what the factory actually needs.

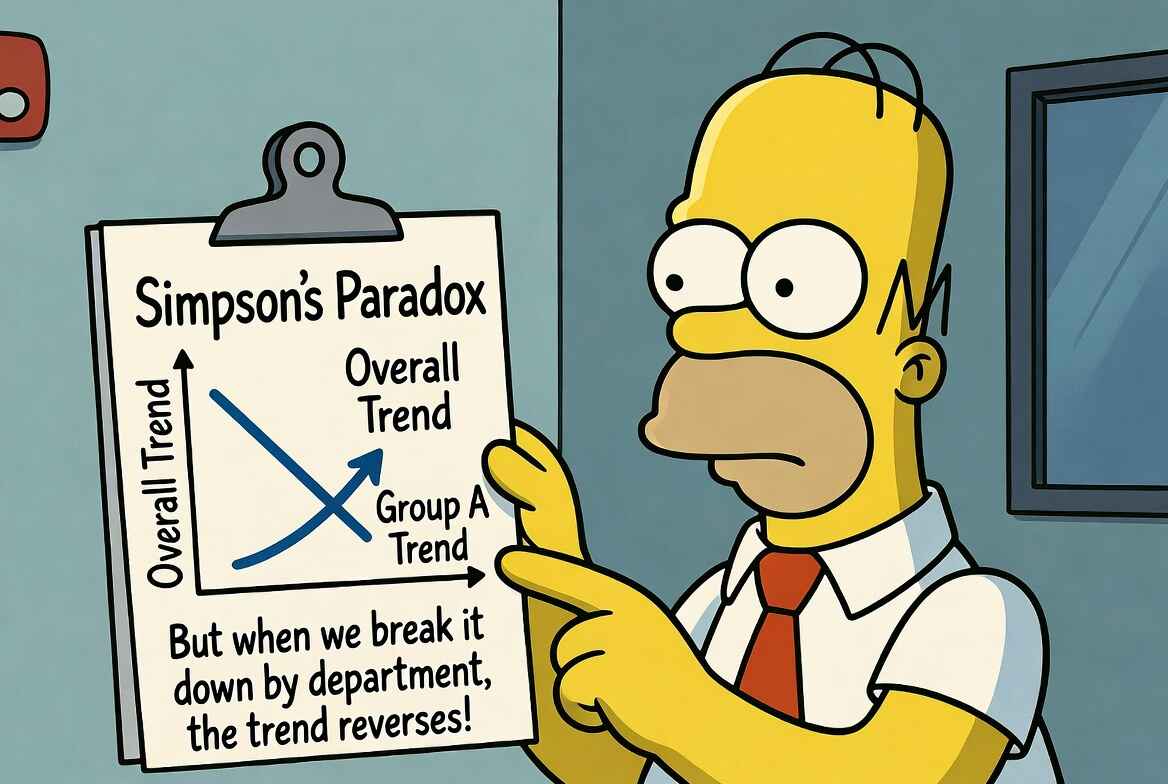

Simpson's Paradox on the Shop Floor: Segment Before You Decide

Same data, opposite conclusions. The roll-up blames Night; the segments show Night ahead. How Simpson's Paradox leads industrial engineers to draw the wrong conclusions.