Don’t Undermeasure: How Cheap Annotation Changes the Tempo of Decision-Making

When measurement is expensive, planning becomes unreliable; automating time‑study annotation lowers the cost of fresh data so that every decision can be grounded in facts.

Why Factories Live with Stale Data

Factories plan every day: takt and staffing, buy‑off timing, line balance, overtime, and lot sizes. The uncomfortable truth is that a surprising share of those plans rests on very little data. This is not because engineers prefer estimates. It is because converting the observations they already collect into trustworthy cycle times, MODAPTS codes, and work combination tables still takes hours of manual annotation. As a result, studies are rationed, and plans often rely on old measurements, single‑sample estimates, or plain guesses.

The stakes rise during new‑product introduction and ramp‑up, when the production system is still being tuned and plans change quickly. Without frequent, recent measurements, plants often miss more than 20% of potential throughput. Research on learning during ramp‑up argues for treating early builds as a sequence of experiments, which requires timely, repeated data collection. Line‑balancing models and digital‑twin planning are only as useful as the data fed into them, which is why recent IEEE reviews of digital‑twin practice keep pointing to data quality and recency as the top consideration for model accuracy. Finally, there is the statistical point that every work‑measurement text repeats: a single observation is an anecdote, and a reliable time standard requires multiple measurements of the same process.
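To make the "multiple measurements" point concrete, here is a minimal Python sketch of a common work‑measurement rule for sizing a time study. From a short pilot, it estimates how many cycles are needed so the mean cycle time lands within ±k of the true value at a chosen confidence, using n = (z·s / (k·x̄))². The pilot cycle times are hypothetical, z = 1.96 corresponds to roughly 95% confidence under a normal approximation, and with very small pilots a Student's t value would be more conservative.

```python
import math
import statistics


def required_observations(samples, accuracy=0.05, z=1.96):
    """Estimate how many cycles to observe so the mean cycle time is within
    +/- `accuracy` (a fraction of the mean) at the confidence implied by `z`.

    Normal-approximation formula: n = (z * s / (accuracy * mean)) ** 2,
    where s is the sample standard deviation of the pilot observations.
    """
    if len(samples) < 2:
        raise ValueError("need at least two pilot observations to estimate spread")
    mean = statistics.mean(samples)
    s = statistics.stdev(samples)  # sample (n - 1) standard deviation
    return math.ceil((z * s / (accuracy * mean)) ** 2)


# Hypothetical pilot study: six cycle times in seconds.
pilot = [41.2, 38.9, 44.5, 40.1, 47.3, 39.6]
print(required_observations(pilot))
```

With this pilot's spread, about ten cycles are needed for ±5% at roughly 95% confidence; a single observation gives no information about that variability at all.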

The Core Innovation: Making Annotation Cheap

This is the problem Material Model solves. Many industrial engineers already record work processes on their phones, GoPros, or overhead cameras, then spend hours turning those clips into MODAPTS codes and Standard Work Combination Table (SWCT) timelines. With Material Model, you upload the same clips (no special setup or fine‑tuning), add plain‑English instructions on how the work should be handled, and get a fully annotated draft. The draft is close enough to correct that a quick round of edits finishes the job, and in straightforward cases it is good enough as is.

Upload → Add 2–3 plain‑English instructions → Get a draft in minutes → Edit → Export.

Edits are relationship-aware, so outputs stay coherent without extra effort: when an industrial engineer splits a motion into two elements, the downstream tables and synchronized timelines adjust; when a step is reclassified, station utilization and the SWCT are recomputed; when a duration is corrected, the MODs and totals reconcile across views instead of requiring the same change in multiple places.
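One way to get this behavior is to keep a single editable list of timed elements and compute every other view (MODs, station totals) from it, so an edit is made once and every view recomputes. The Python sketch below uses a hypothetical `Element` model and hypothetical helper names; it illustrates the design idea, not Material Model's internal implementation. It uses the MODAPTS convention of 1 MOD = 0.129 seconds.

```python
from dataclasses import dataclass, replace

MOD_SECONDS = 0.129  # MODAPTS convention: 1 MOD = 0.129 s


@dataclass(frozen=True)
class Element:
    """Hypothetical timed work element; the single source of truth."""
    station: str
    name: str
    start: float  # seconds from clip start
    end: float

    @property
    def duration(self) -> float:
        return self.end - self.start

    @property
    def mods(self) -> int:
        # Derived, never stored, so it cannot drift out of sync with the timing.
        return round(self.duration / MOD_SECONDS)


def split(elements, index, at, names):
    """Return a new element list with elements[index] split at time `at`."""
    e = elements[index]
    if not e.start < at < e.end:
        raise ValueError("split point must fall strictly inside the element")
    first = replace(e, name=names[0], end=at)
    second = replace(e, name=names[1], start=at)
    return elements[:index] + [first, second] + elements[index + 1:]


def station_totals(elements):
    """Derived view: total seconds per station, recomputed on every call."""
    totals = {}
    for e in elements:
        totals[e.station] = totals.get(e.station, 0.0) + e.duration
    return totals


elements = [
    Element("S1", "Get bracket", 0.0, 2.0),
    Element("S1", "Fasten bracket", 2.0, 9.5),
    Element("S2", "Inspect weld", 0.0, 3.0),
]
edited = split(elements, 1, 5.0, ("Drive screw 1", "Drive screw 2"))
for e in edited:
    print(e.station, e.name, round(e.duration, 2), e.mods)
print(station_totals(edited))
```

Splitting "Fasten bracket" adds a row to every view but leaves the S1 total at 9.5 s. Correcting a duration would flow into MODs and totals the same way, because none of those values is stored separately.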

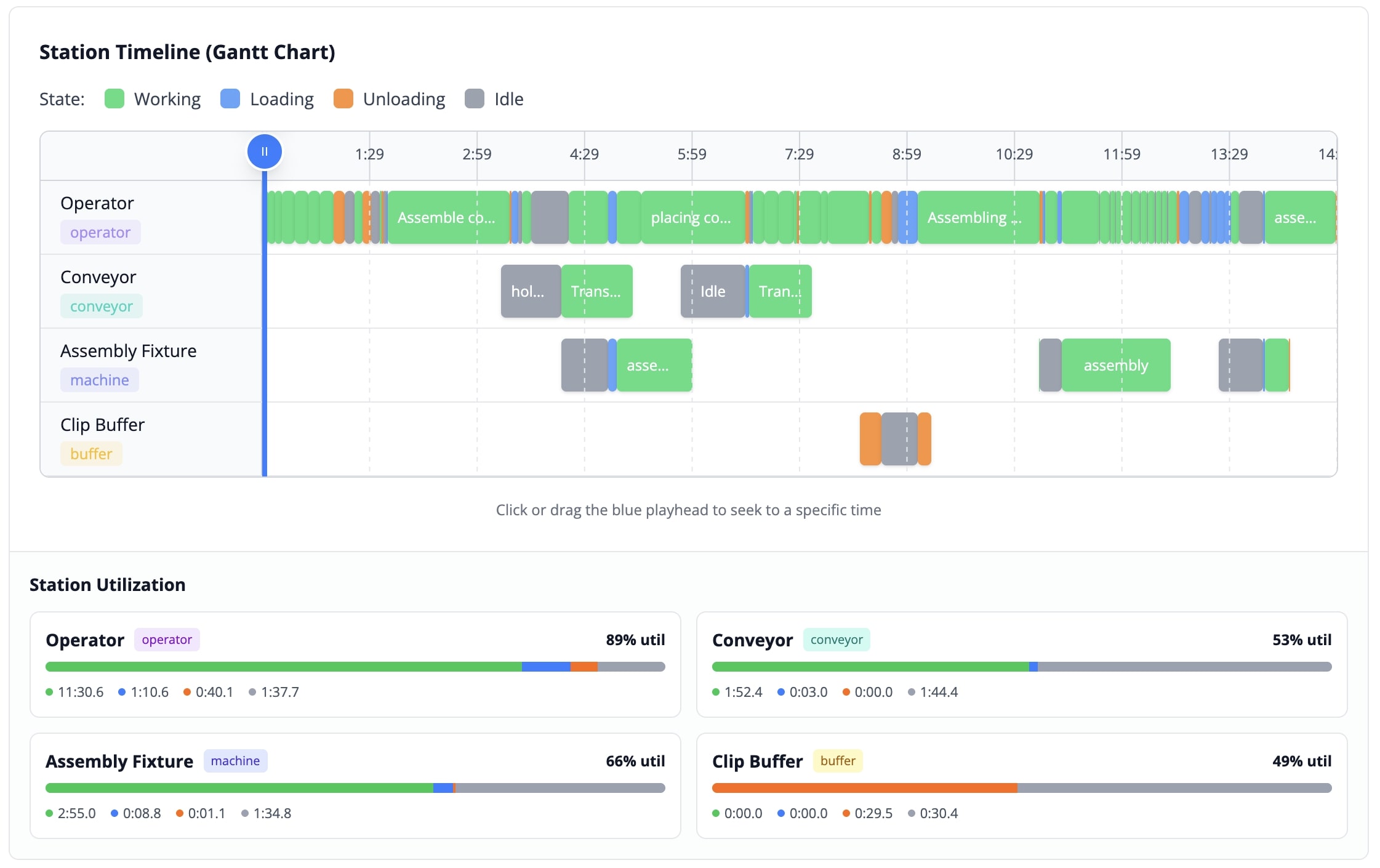

Outputs match the artifacts industrial engineers already use, which keeps adoption straightforward. SWCT output provides synchronized, station‑level timelines and utilization views that are useful during ramps and rebalancing. MODAPTS output provides motion‑level coverage with export‑ready codes and MODs for method work. Everything in the app is editable and exports as clean spreadsheets that drop into the files the team already maintains. Teams that need system integration can use the API, but it is optional and not a prerequisite for being productive on day one.
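As an illustration of station‑level utilization and spreadsheet export, the Python sketch below computes utilization as station work content divided by takt time (one common definition; some teams divide by bottleneck cycle time instead) and writes CSV with the standard library. Station names, work times, and the 60‑second takt are hypothetical, and the column layout is an assumption, not Material Model's export format.

```python
import csv
import io


def utilization_rows(station_work, takt_seconds):
    """Return (station, work_seconds, utilization) rows sorted by station.

    Utilization here is work content / takt time, so values above 1.0 flag
    stations that cannot keep pace without rebalancing.
    """
    return [
        (station, work, work / takt_seconds)
        for station, work in sorted(station_work.items())
    ]


def to_csv(rows):
    """Serialize rows to CSV text that opens directly in a spreadsheet."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["station", "work_seconds", "utilization"])
    for station, work, util in rows:
        writer.writerow([station, f"{work:.1f}", f"{util:.2f}"])
    return buf.getvalue()


station_work = {"S1": 52.0, "S2": 61.5, "S3": 38.0}  # hypothetical seconds per cycle
rows = utilization_rows(station_work, takt_seconds=60.0)
print(to_csv(rows))
over_takt = [station for station, _, util in rows if util > 1.0]
print("Over takt:", over_takt)
```

Keeping the calculation separate from the export means the same rows can feed a CSV today and an API integration later, if a team ever needs one.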

The Technological Difference

Flexibility comes from a vision‑language model (VLM). Like its cousins, the large language models behind apps such as ChatGPT, it can follow instructions, and it handles real plant conditions: handheld phone footage, GoPro clips, overhead camera video, or security feeds with uneven lighting. It also uses audio cues such as tool chirps and machine tones to tell apart steps that look similar. Unlike systems that demand per‑station calibration or rigid camera grids, it can be guided with plain‑English instructions that take a few moments to write.

Legacy systems rely on task‑specific models that expect the manufacturer to instrument the line, constrain the scene, and train a custom model before they show any value. We think this is backwards. Industrial engineers should start with the videos they already have, optionally add 2 or 3 plain‑English rules, and produce a first draft that reflects how the work runs today. We believe technology should fit the industrial engineer, not the other way around, so there is no need to redesign workflows: the work stays as video clips with brief notes and the spreadsheets the team already uses.

Because the core is an instruction‑following annotation agent, a plant's own standards become rules to encode. If method sheets group motions differently, describe the pattern and drafts come back in that format. If you need station‑specific element definitions, expanded motion groups, or quality checks excluded from cycle time, add those instructions and the system adapts, without forcing changes to the templates where the results end up.
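In the product, rules like these are written in plain English. To show what one of them changes numerically, here is a minimal Python sketch of an "exclude quality checks from cycle time" rule applied to a drafted element list. The `category` labels, element names, and times are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Element:
    name: str
    category: str  # hypothetical labels: "handling", "value_add", "quality_check"
    seconds: float


def cycle_time(elements, exclude_categories=frozenset()):
    """Sum element times, skipping any category a plant rule excludes."""
    return sum(e.seconds for e in elements if e.category not in exclude_categories)


draft = [
    Element("Pick housing", "handling", 3.2),
    Element("Press bearing", "value_add", 6.8),
    Element("Visual check", "quality_check", 2.5),
]

print(round(cycle_time(draft), 2))                      # every element counted
print(round(cycle_time(draft, {"quality_check"}), 2))  # plant rule applied
```

Treating the rule as a filter over the same element list means the excluded check remains in the data (and in any view built from it) while dropping out of the cycle‑time total.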

What Changes When Annotations Become Cheap

Example Gantt chart auto-generated from a video

When annotation becomes cheap, the tempo of decision‑making changes in visible ways. Measurement stops being saved for gate reviews and becomes something teams do as soon as a question matters. Engineers capture a few clips (some typical passes, some messy ones), add a few rules, review the draft, make an edit that propagates where it should, and end up with a useful result. With shared timelines and tables that everyone can review, disputes are resolved with data instead of opinions, problems are caught at two stations instead of twenty, and lines are rebalanced when needed because justifying a change no longer takes a weekend of manual coding.

Material Model does not replace the judgment of an experienced industrial engineer. It reduces the time from video to numbers by producing a first draft that can be corrected in minutes and exported into the templates already in use. The practical effect is fewer stale assumptions, fewer late debates over mismatched spreadsheets, and fewer nights spent on manual coding. Because clips are processed in parallel, clearing a month’s backlog of studies becomes routine work rather than a job for overtime or consultants.

Browse the public examples of Material Model annotations at materialmodel.com to see what the outputs look like before uploading your own clips. When you’re ready to try it on real work, register at app.materialmodel.com/register; new accounts include 600 free credits, enough for ten minutes of video processing. That is sufficient for a pair of five‑minute studies: upload the clips, add plain‑English instructions if needed, review and edit the draft, then use the results to make informed decisions about your line without waiting for the next review meeting.

Related Articles

Better Inputs, Better Factories: What We Learned About Measuring Work

Industrial engineers spend 4-14 hours weekly recording GoPro videos, writing MODAPTS codes, and hunting for missing minutes in their routings. Here is what we learned from IEs at Tesla, GM, and dozens of other manufacturers about how time studies actually get done.

When KPIs Go Wrong: Goodhart's Law for Industrial Engineers

KPIs that boil down to “just do it faster” create rework, hidden factories, and fake wins on dashboards. But there is a better way that can help align everyone from operator to supervisor with what the factory actually needs.

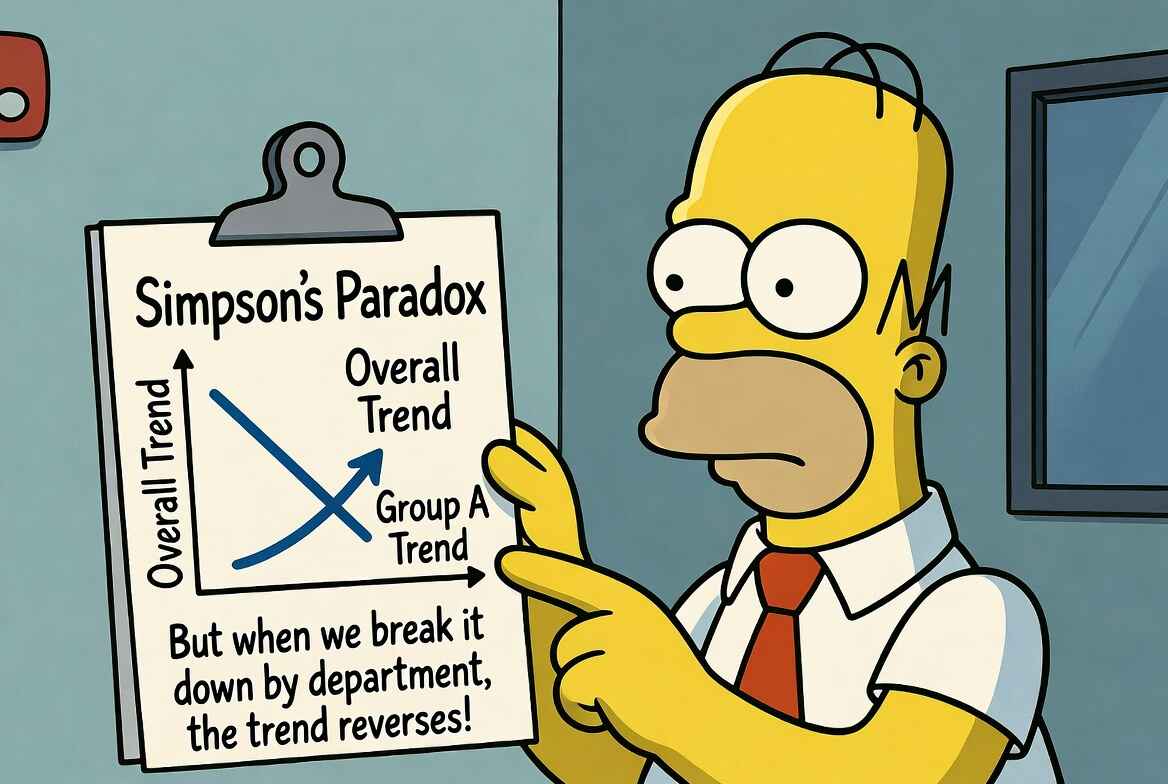

Simpson's Paradox on the Shop Floor: Segment Before You Decide

Same data, opposite conclusions. The roll-up blames Night; the segments show Night ahead. How Simpson's Paradox leads industrial engineers to make wrong conclusions.